Telemetry, within the context of DevOps, refers to the automated collection, transmission, and analysis of data from various components of a software system. This data-driven approach is fundamental in monitoring the health, performance, and security of applications and infrastructure. By leveraging telemetry, organizations can gain real-time insights into the functioning of their systems, making it an indispensable element of modern DevOps practices.

The process of telemetry involves gathering detailed metrics and logs from servers, applications, databases, and network devices. This data is then transmitted to a central location where it can be analyzed to identify patterns, detect anomalies, and provide actionable insights. The insights gleaned from telemetry data enable DevOps teams to preemptively address potential issues, thus ensuring system reliability and minimizing downtime.
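The "detect anomalies" step can be made concrete with a small sketch. The example below assumes latency samples have already been collected centrally and flags any sample that deviates strongly from the preceding window. The function name, window size, and threshold are illustrative choices, not a recommendation for production alerting.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return the indexes of samples that deviate strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history: treat any change at all as a deviation.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        # Flag the sample when it lies more than `threshold` standard deviations away.
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical response times in milliseconds, with one obvious spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 450, 101, 100]
print(find_anomalies(latencies_ms))
```

A rolling window keeps the check cheap, but a single spike also inflates the window that follows it, so real systems often use more robust statistics.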

One of the primary benefits of telemetry in DevOps is the early detection of issues. By continuously monitoring the system, telemetry helps identify performance bottlenecks, signs of security problems, and other anomalies before they escalate into significant problems. This proactive approach not only enhances system stability but also improves user experience by reducing the incidence of unexpected outages.

Moreover, telemetry contributes to improved system reliability. With continuous monitoring and real-time data analysis, DevOps teams can quickly diagnose and resolve issues, leading to more stable and resilient systems. This reliability is crucial for maintaining user trust and ensuring seamless operation of business-critical applications.

Another significant advantage of telemetry is the enhancement of decision-making capabilities. By providing a comprehensive view of the system’s performance and health, telemetry data enables informed decisions regarding system optimization, resource allocation, and future development. This data-driven decision-making process is key to achieving operational excellence and driving continuous improvement in DevOps practices.

In essence, telemetry serves as the backbone of effective DevOps strategies, offering invaluable insights and fostering a proactive approach to system management. Its role in early issue detection, improved reliability, and informed decision-making underscores its importance in the ever-evolving landscape of software development and operations.

Key Components of Telemetry in DevOps

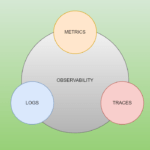

Telemetry in a DevOps environment revolves around three core components: metrics, logs, and traces. Each of these elements plays a crucial role in capturing and analyzing different facets of system performance and behavior, thereby ensuring comprehensive monitoring and actionable insights.

Metrics provide quantitative data points that reflect the state of the system over time. These could include CPU usage, memory consumption, and application response times, among others. Metrics are typically collected at regular intervals and are instrumental in identifying trends or anomalies within the system. Prometheus is commonly used to collect and store metrics, and Grafana to visualize them in near real time.
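To illustrate what a metric looks like in practice, here is a minimal sketch of a counter and a gauge rendered in the plain-text exposition format that Prometheus scrapes. The `Metric` class is a hypothetical stand-in written for this example and is not the official Prometheus client library, which also handles labels, histograms, and concurrency.

```python
class Metric:
    """A single unlabeled metric; kind is 'counter' or 'gauge'."""

    def __init__(self, name, kind, help_text):
        if kind not in ("counter", "gauge"):
            raise ValueError(f"unsupported metric kind: {kind}")
        self.name = name
        self.kind = kind
        self.help_text = help_text
        self.value = 0.0

    def inc(self, amount=1.0):
        # Counters only ever go up; gauges may move in either direction.
        if self.kind == "counter" and amount < 0:
            raise ValueError("a counter may only increase")
        self.value += amount

    def set(self, value):
        if self.kind != "gauge":
            raise ValueError("only gauges can be set directly")
        self.value = float(value)

    def render(self):
        # HELP and TYPE comment lines followed by one sample line.
        return (
            f"# HELP {self.name} {self.help_text}\n"
            f"# TYPE {self.name} {self.kind}\n"
            f"{self.name} {self.value}\n"
        )


# Hypothetical metric names chosen for the example.
requests_total = Metric("http_requests_total", "counter", "Total HTTP requests handled.")
memory_bytes = Metric("process_memory_bytes", "gauge", "Resident memory in bytes.")

for _ in range(3):
    requests_total.inc()
memory_bytes.set(52428800)

exposition = requests_total.render() + memory_bytes.render()
print(exposition)
```

A monitoring server would fetch this text at a regular interval and store each value with a timestamp, which is what turns single readings into a time series.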

Logs offer a detailed chronological record of events that occur within the system. They are invaluable for troubleshooting and understanding the sequence of actions leading up to an issue. Logs capture granular details such as error messages, user activities, and system states. Technologies like Elasticsearch, Logstash, and Kibana (ELK Stack) or Fluentd and Fluent Bit are often employed to collect, aggregate, and analyze log data efficiently.
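Log pipelines are generally easier to build when each log line is structured rather than free text. The sketch below uses Python's standard `logging` module to emit one JSON object per event. The `JsonFormatter` class, the `context` key, and the field names are assumptions made for this example, not a required schema.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Extra fields arrive via logger.error(..., extra={"context": {...}}).
        entry.update(getattr(record, "context", {}))
        return json.dumps(entry)


def build_logger(name, stream):
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep the example output out of the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    return logger


# An in-memory stream stands in for a file or a log shipper's input.
buffer = io.StringIO()
log = build_logger("checkout", buffer)
log.error("payment failed", extra={"context": {"order_id": "A-1001", "attempt": 2}})
print(buffer.getvalue().strip())
```

Because every field is a named key, a collector can index `order_id` or `level` directly instead of parsing message strings.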

Traces track the lifecycle of a request as it propagates through various components of a distributed system. They provide insights into the interactions between different services, helping to pinpoint latency issues and performance bottlenecks. Distributed tracing tools like Jaeger and Zipkin are widely used to capture and analyze trace data, facilitating a deeper understanding of system dependencies and performance.
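The core idea behind a trace is that every unit of work (a span) shares a trace ID and records which span started it. The sketch below shows this within a single process using only the standard library. The `Tracer` and `Span` classes are hypothetical, and real tracing systems also propagate these IDs across network calls, for example through the W3C Trace Context `traceparent` header.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    start: float = 0.0
    end: float = 0.0

    @property
    def duration_ms(self):
        return (self.end - self.start) * 1000


class Tracer:
    def __init__(self):
        self.finished = []  # completed spans, in the order they ended
        self._stack = []    # spans that are currently open

    @contextmanager
    def span(self, name):
        parent = self._stack[-1] if self._stack else None
        current = Span(
            name=name,
            # Child spans inherit the trace ID; a root span creates a new one.
            trace_id=parent.trace_id if parent else uuid.uuid4().hex,
            span_id=uuid.uuid4().hex[:16],
            parent_id=parent.span_id if parent else None,
            start=time.perf_counter(),
        )
        self._stack.append(current)
        try:
            yield current
        finally:
            current.end = time.perf_counter()
            self._stack.pop()
            self.finished.append(current)


tracer = Tracer()
with tracer.span("GET /checkout"):
    with tracer.span("inventory.reserve"):
        time.sleep(0.01)  # simulated downstream call
    with tracer.span("payments.charge"):
        time.sleep(0.02)  # simulated downstream call

for s in tracer.finished:
    print(f"{s.name}: {s.duration_ms:.1f} ms (parent={s.parent_id})")
```

Comparing child durations against the root span is how a trace view shows which service contributed most to a slow request.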

Collecting and processing these telemetry components requires a unified approach to ensure seamless integration and comprehensive monitoring. Tools like OpenTelemetry offer a standardized framework for collecting metrics, logs, and traces, enabling consistent data collection across diverse environments and technologies.

Despite its advantages, implementing telemetry in DevOps comes with challenges. The sheer volume of data generated can be overwhelming, necessitating effective data management and storage solutions. Ensuring data integration across various tools and maintaining data quality are additional hurdles that teams must navigate. Overcoming these challenges is crucial for leveraging telemetry to its full potential, ultimately enhancing system reliability and performance.
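One common way to keep data volume manageable is to sample traces rather than store every one. The sketch below makes the keep-or-drop decision from a hash of the trace ID, so every service that sees the same ID reaches the same decision. The function name and the 10% rate are illustrative assumptions.

```python
import hashlib


def keep_trace(trace_id: str, sample_rate: float) -> bool:
    """Decide deterministically whether to keep a trace, given a rate in [0, 1]."""
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash onto the range [0, 2**64).
    bucket = int.from_bytes(digest[:8], "big")
    return bucket < sample_rate * 2**64


# Hypothetical trace IDs; in practice these come from the tracing system.
trace_ids = [f"trace-{i}" for i in range(10_000)]
kept = [t for t in trace_ids if keep_trace(t, 0.1)]
print(f"kept {len(kept)} of {len(trace_ids)} traces")
```

Sampling trades completeness for cost, so teams usually keep metrics unsampled and apply sampling mainly to high-volume traces or debug-level logs.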

Telemetry vs. Observability

In the realm of DevOps, the terms “telemetry” and “observability” are frequently used interchangeably. However, they have distinct meanings and serve different purposes. Understanding these differences is crucial for effectively monitoring and maintaining the health of complex systems.

Observability refers to the capability to infer the internal state of a system based on the data it generates. It is a comprehensive concept that includes various types of data such as metrics, logs, and traces. Metrics provide quantitative measures of system performance, logs offer detailed event records, and traces follow the journey of requests through the system. These components collectively contribute to a holistic view of the system’s behavior and health.

Telemetry, on the other hand, is a subset of observability. It specifically focuses on the collection, transmission, and storage of data generated by the system. Telemetry involves gathering real-time data from various sources within the system and transmitting it to a centralized location for analysis. This data collection is essential for enabling observability, as it provides the raw information needed to assess the system’s state.

While observability aims to provide actionable insights into a system’s performance and issues, telemetry ensures that the necessary data is available for this analysis. In essence, telemetry is the mechanism that feeds data into the observability processes. Without effective telemetry, achieving true observability would be challenging, as there would be insufficient data to analyze.

In summary, while telemetry and observability are closely related, they are not synonymous. Telemetry is concerned with data collection and transmission, whereas observability encompasses a broader scope, including the analysis and interpretation of collected data. Together, they form a robust framework for monitoring, diagnosing, and improving system performance in a DevOps environment.

Top Telemetry Technologies in DevOps

In the realm of DevOps, effective telemetry is crucial for monitoring, diagnosing, and optimizing software performance. Among the leading telemetry technologies, Prometheus, the ELK Stack, and Jaeger stand out for their robust features and seamless integration capabilities.

Prometheus is a widely adopted open-source system for metrics collection and monitoring. A single Prometheus server can ingest a large volume of samples per second, and larger deployments are typically scaled out by running multiple servers, using federation, or forwarding data to remote storage. Its query language, PromQL, provides flexibility in data analysis, while its integration with Kubernetes, Grafana, and other DevOps tools enhances its utility. Prometheus excels at providing near-real-time insights and alerts, although users may find its learning curve steep initially.
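Many metric queries boil down to asking how fast a counter is growing. The sketch below computes a per-second rate from raw counter samples, treating any drop in value as a counter restart. It is a simplified illustration of the idea and does not reproduce the exact behaviour of PromQL's `rate()` function, which also extrapolates to the window boundaries.

```python
def per_second_rate(samples):
    """Average per-second increase of a counter.

    `samples` is a list of (timestamp_seconds, counter_value), oldest first.
    Returns None when a rate cannot be computed.
    """
    if len(samples) < 2:
        return None
    increase = 0.0
    for (_, prev), (_, curr) in zip(samples, samples[1:]):
        if curr >= prev:
            increase += curr - prev
        else:
            # The counter went backwards, so assume the process restarted at zero.
            increase += curr
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed if elapsed > 0 else None


# Hypothetical scrapes taken every 15 seconds, with a restart before t=45.
scrapes = [(0, 100), (15, 130), (30, 160), (45, 10), (60, 40)]
print(per_second_rate(scrapes))
```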

The ELK Stack—comprising Elasticsearch, Logstash, and Kibana—is another cornerstone of telemetry in DevOps. Elasticsearch is a search engine that indexes and stores log data, Logstash processes and transforms these logs, and Kibana provides an intuitive interface for visualizing data. Together, they offer a comprehensive log management solution. The ELK Stack is highly scalable and flexible, capable of handling large volumes of log data from various sources. However, its complexity can sometimes pose challenges, particularly in terms of configuration and resource consumption. Nonetheless, its powerful search capabilities and visualizations make it a preferred choice for many enterprises.

Jaeger, an open-source tool for distributed tracing, helps in monitoring and troubleshooting microservices-based applications. Jaeger traces the path of requests through various services, providing insights into latency issues and performance bottlenecks. It integrates well with other telemetry tools and platforms like OpenTelemetry, Prometheus, and Grafana, offering a cohesive monitoring solution. Jaeger’s ease of use and ability to handle high-throughput environments make it a valuable asset in modern DevOps practices.

When comparing these technologies, each has a distinct strength: Prometheus for real-time metrics and alerting, the ELK Stack for comprehensive log management and deep log analysis, and Jaeger for tracing and diagnosing complex interactions between microservices. Scalability and cost vary across these tools. Self-hosted open-source deployments generally have lower licensing costs but require more configuration and management effort.

Emerging trends in telemetry include the increasing adoption of OpenTelemetry, a unified standard for collecting, processing, and exporting telemetry data. This trend reflects a shift towards more integrated and standardized approaches to observability in DevOps, promising to simplify the telemetry landscape significantly.

Best Practices for Implementing Telemetry in DevOps

Effective implementation of telemetry in a DevOps environment begins with the clear definition of goals and metrics. Establishing precise objectives helps in aligning telemetry efforts with business outcomes, ensuring that the collected data is both relevant and actionable. Metrics should be tailored to the specific needs of the application and infrastructure, focusing on aspects like performance, reliability, and user experience.

Designing a scalable and resilient telemetry architecture is paramount. A well-thought-out architecture can handle varying loads and adapt to growing data volumes without compromising on performance. This involves leveraging distributed systems and cloud-native technologies that offer flexibility and robustness. Data should be collected from multiple sources, aggregated efficiently, and stored in a way that facilitates easy retrieval and analysis.
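Part of a resilient design is deciding what happens when the telemetry backend is slow or unavailable. The sketch below batches events before sending them and, if sending fails, keeps them in a bounded buffer that drops the oldest entries. The `BatchingBuffer` class, its parameters, and the use of `OSError` to signal a failed send are assumptions made for illustration.

```python
from collections import deque


class BatchingBuffer:
    """Collect events and hand them to `send` in batches."""

    def __init__(self, send, batch_size=3, capacity=1000):
        self._send = send
        self._batch_size = batch_size
        # A bounded deque discards the oldest event once capacity is reached.
        self._pending = deque(maxlen=capacity)
        self.dropped = 0

    def add(self, event):
        if len(self._pending) == self._pending.maxlen:
            self.dropped += 1  # the append below will evict the oldest event
        self._pending.append(event)
        if len(self._pending) >= self._batch_size:
            self.flush()

    def flush(self):
        """Try to send everything pending; return True on success."""
        if not self._pending:
            return True
        batch = list(self._pending)
        try:
            self._send(batch)
        except OSError:
            return False  # keep the events so a later flush can retry
        self._pending.clear()
        return True


# A plain list stands in for the network call to a telemetry backend.
sent_batches = []
buffer = BatchingBuffer(sent_batches.append, batch_size=3)
for i in range(7):
    buffer.add({"event": i})
buffer.flush()
print([len(batch) for batch in sent_batches])
```

Bounding the buffer means an outage in the monitoring system costs some telemetry data rather than exhausting memory in the application being monitored.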

Selecting the right tools and technologies for telemetry is another critical step. It is essential to evaluate tools based on specific requirements such as integration capabilities, ease of use, and support for different data types. Factors like budget constraints and the existing tech stack should also influence the choice of tools. Popular telemetry tools in the DevOps ecosystem include Prometheus, Grafana, and the ELK Stack, each offering unique features to cater to diverse needs.

Automation is central to effective telemetry. Integrating telemetry with CI/CD pipelines ensures that monitoring and logging are consistent and automated across all stages of the development lifecycle. Automated telemetry helps in early detection of issues, enabling quicker resolution and minimizing downtime. Pipelines built with tools like Jenkins, GitLab CI, and CircleCI can include telemetry checkpoints in the deployment process, for example a step that checks key metrics after a release before the rollout continues.
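A telemetry checkpoint in a pipeline can be as simple as a script that compares post-deployment metrics against agreed thresholds. The sketch below shows one possible shape for such a check. The function name, the thresholds, and the input numbers are hypothetical, and in a real pipeline the inputs would come from a query against the metrics backend.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GateResult:
    passed: bool
    reasons: List[str] = field(default_factory=list)


def evaluate_gate(total_requests, failed_requests, p95_latency_ms,
                  max_error_rate=0.01, max_p95_latency_ms=500.0, min_requests=100):
    """Decide whether a rollout may continue, based on post-deploy metrics."""
    reasons = []
    if total_requests == 0 or total_requests < min_requests:
        # Too little traffic to judge the release either way.
        reasons.append(
            f"only {total_requests} requests observed; need at least {min_requests}"
        )
    else:
        error_rate = failed_requests / total_requests
        if error_rate > max_error_rate:
            reasons.append(f"error rate {error_rate:.2%} exceeds {max_error_rate:.2%}")
    if p95_latency_ms > max_p95_latency_ms:
        reasons.append(
            f"p95 latency {p95_latency_ms:.0f} ms exceeds {max_p95_latency_ms:.0f} ms"
        )
    return GateResult(passed=not reasons, reasons=reasons)


# Hypothetical measurements taken a few minutes after two different deployments.
healthy = evaluate_gate(total_requests=5000, failed_requests=12, p95_latency_ms=310.0)
degraded = evaluate_gate(total_requests=5000, failed_requests=400, p95_latency_ms=820.0)

for label, result in (("healthy", healthy), ("degraded", degraded)):
    print(label, "PASS" if result.passed else "FAIL", result.reasons)
```

A pipeline step would typically exit with a non-zero status when the gate fails, which stops the rollout or triggers a rollback.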

Ongoing monitoring, analysis, and optimization are crucial to maintaining an effective telemetry system. Continuous evaluation of collected data helps in identifying patterns, uncovering anomalies, and driving improvements. Regularly updating monitoring dashboards and alerting mechanisms ensures that the system remains responsive to new challenges and evolving business requirements. Engaging in periodic reviews and adopting a proactive approach to optimization fosters a culture of continuous improvement, pivotal in a dynamic DevOps environment.